Differentiation

The notion of the derivative of a function or differentiability originates from the concept of approximating a function near a given point using the "best" linear approximation. This approximation, if it exists, is unique and is given by the line that is tangent to the graph of the function at the given point $a$, and the slope of the line is the derivative of the function at $a$.

A function $f:\mathbb{R}\to\mathbb{R}$ is differentiable at $a$ if the limit

$$f'(a)=\lim_{h\to 0}\frac{f(a+h)-f(a)}{h}$$

exists. This limit is known as the derivative of $f$ at $a$, and the function $f'$, possibly defined on only a subset of $\mathbb{R}$, is the derivative (or derivative function) of $f$. If the derivative exists everywhere, the function is said to be differentiable.
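The following is a rough numerical illustration, not part of the original text. It evaluates the difference quotient from the limit above for shrinking $h$ in floating point. The helper name `difference_quotient` and the sample functions are illustrative assumptions. Floating-point rounding eventually spoils the estimate for very small $h$, so this only suggests the limit and does not compute it.

```python
import math


def difference_quotient(f, a, h):
    """Slope (f(a + h) - f(a)) / h of the secant line through a and a + h (hypothetical helper)."""
    return (f(a + h) - f(a)) / h


# sin'(0) = cos(0) = 1, so these quotients should approach 1 as h shrinks.
for k in range(1, 7):
    h = 10.0 ** -k
    print(h, difference_quotient(math.sin, 0.0, h))

# |x| is continuous at 0 but not differentiable there:
# the right- and left-hand quotients disagree, so the two-sided limit does not exist.
print(difference_quotient(abs, 0.0, 1e-6))   # prints 1.0
print(difference_quotient(abs, 0.0, -1e-6))  # prints -1.0
```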

As a simple consequence of the definition, $f$ is continuous at $a$ if it is differentiable there, because $f(a+h)-f(a)=h\cdot\frac{f(a+h)-f(a)}{h}\to 0\cdot f'(a)=0$ as $h\to 0$. Differentiability is therefore a stronger regularity condition (a condition describing the "smoothness" of a function) than continuity. It is possible for a function to be continuous on the entire real line but not differentiable anywhere (see Weierstrass's nowhere differentiable continuous function). Higher-order derivatives can be discussed as well, by taking the derivative of a derivative function, and so on.

One can classify functions by their differentiability class. The class $C^0$ (sometimes $C^0([a,b])$ to indicate the interval of applicability) consists of all continuous functions. The class $C^1$ consists of all differentiable functions whose derivative is continuous; such functions are called continuously differentiable. Thus, a $C^1$ function is exactly a function whose derivative exists and is of class $C^0$. In general, the classes $C^k$ can be defined recursively by declaring $C^0$ to be the set of all continuous functions and declaring $C^k$ for any positive integer $k$ to be the set of all differentiable functions whose derivative is in $C^{k-1}$. In particular, $C^k$ is contained in $C^{k-1}$ for every $k$, and there are examples to show that this containment is strict. Class $C^\infty$ is the intersection of the sets $C^k$ as $k$ varies over the non-negative integers, and the members of this class are known as the smooth functions. Class $C^\omega$ consists of all analytic functions, and is strictly contained in $C^\infty$ (see bump function for a smooth function that is not analytic).
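One standard example of a function in $C^1$ but not in $C^2$, so that the containment $C^2\subseteq C^1$ is strict, is $f(x)=x\,|x|$. The worked computation below is added for illustration and is not taken from the original text:

$$
\begin{aligned}
f(x) &= x\,|x| = \begin{cases} x^{2}, & x \ge 0,\\ -x^{2}, & x < 0,\end{cases}\\
f'(x) &= 2|x| \quad (x \neq 0), \qquad f'(0) = \lim_{h\to 0}\frac{h\,|h|}{h} = \lim_{h\to 0}|h| = 0 = 2|0|.
\end{aligned}
$$

So $f'(x)=2|x|$ for every $x$. This derivative is continuous, hence $f\in C^1$. However, $2|x|$ is not differentiable at $0$, because its one-sided difference quotients there are $+2$ and $-2$, so $f\notin C^2$.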

Series

A series formalizes the imprecise notion of taking the sum of an endless sequence of numbers. The idea that taking the sum of an "infinite" number of terms can lead to a finite result was counterintuitive to the ancient Greeks and led to the formulation of a number of paradoxes by Zeno and other philosophers. The modern notion of assigning a value to a series avoids dealing with the ill-defined notion of adding an "infinite" number of terms. Instead, the finite sum of the first $n$ terms of the sequence, known as a partial sum, is considered, and the concept of a limit is applied to the sequence of partial sums as $n$ grows without bound. The series is assigned the value of this limit, if it exists.

Given an (infinite) sequence $(a_n)$, we can define an associated series as the formal mathematical object $a_1+a_2+a_3+\cdots=\sum_{n=1}^{\infty}a_n$, sometimes simply written as $\sum a_n$. The partial sums of a series $\sum a_n$ are the numbers $s_n=\sum_{j=1}^{n}a_j$. A series $\sum a_n$ is said to be convergent if the sequence consisting of its partial sums, $(s_n)$, is convergent; otherwise it is divergent. The sum of a convergent series is defined as the number $s=\lim_{n\to\infty}s_n$.

The word "sum" is used here in a metaphorical sense as a shorthand for taking the limit of a sequence of partial sums and should not be interpreted as simply "adding" an infinite number of terms. For instance, in contrast to the behavior of finite sums, rearranging the terms of an infinite series may result in convergence to a different number (see the article on the Riemann rearrangement theorem for further discussion).

An example of a convergent series is a geometric series, which forms the basis of one of Zeno's famous paradoxes:

$$\sum_{n=1}^{\infty}\frac{1}{2^{n}}=\frac{1}{2}+\frac{1}{4}+\frac{1}{8}+\cdots=1.$$
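The partial sums of the geometric series $\frac12+\frac14+\frac18+\cdots$ can be computed exactly with rational arithmetic. This makes the convergence $s_n = 1-2^{-n}\to 1$ visible without rounding noise. The sketch below uses Python's standard `fractions.Fraction` and `itertools.accumulate`; the helper name `partial_sums` is hypothetical.

```python
from fractions import Fraction
from itertools import accumulate


def partial_sums(terms):
    """Return [s_1, s_2, ..., s_n], the running partial sums of a finite list of terms."""
    return list(accumulate(terms))


# First ten terms a_n = 1/2**n of the geometric series, as exact rationals.
terms = [Fraction(1, 2 ** n) for n in range(1, 11)]
sums = partial_sums(terms)
print(sums[-1])  # prints 1023/1024, i.e. 1 - 1/2**10
```

Each partial sum falls short of $1$ by exactly the last term added, which is why the limit is $1$.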

In contrast, the harmonic series has been known since the Middle Ages to be a divergent series:

$$\sum_{n=1}^{\infty}\frac{1}{n}=1+\frac{1}{2}+\frac{1}{3}+\frac{1}{4}+\cdots=\infty.$$

(Here, "$=\infty$" is merely a notational convention to indicate that the partial sums of the series grow without bound.)
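The growth of the harmonic partial sums is very slow. $H_n=\sum_{k=1}^{n}1/k$ grows roughly like $\ln n$, and $H_n-\ln n$ decreases toward the Euler–Mascheroni constant $\gamma\approx 0.5772$. The quick numerical sketch below illustrates this; the function name `harmonic` is hypothetical, and `math.fsum` is used only to reduce rounding error.

```python
import math


def harmonic(n):
    """Return the n-th partial sum H_n = 1 + 1/2 + ... + 1/n of the harmonic series."""
    return math.fsum(1.0 / k for k in range(1, n + 1))


for n in (10, 1_000, 100_000):
    # H_n itself keeps growing, but H_n - ln(n) stays bounded (it decreases toward gamma),
    # so the partial sums grow without bound at the same rate as ln(n).
    print(n, harmonic(n), harmonic(n) - math.log(n))
```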

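A series $\sum a_n$ is said to converge absolutely if $\sum |a_n|$ is convergent. A convergent series $\sum a_n$ for which $\sum |a_n|$ diverges is said to converge non-absolutely.[6] It is easily shown that absolute convergence of a series implies its convergence: since $\left|\sum_{k=m}^{n}a_k\right|\le\sum_{k=m}^{n}|a_k|$, the Cauchy criterion for $\sum|a_n|$ carries over to $\sum a_n$. On the other hand, an example of a series that converges non-absolutely is

$$\sum_{n=1}^{\infty}\frac{(-1)^{n-1}}{n}=1-\frac{1}{2}+\frac{1}{3}-\frac{1}{4}+\cdots=\ln 2.$$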
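The alternating harmonic series $1-\frac12+\frac13-\frac14+\cdots$ converges only non-absolutely. That makes it a natural test case for the rearrangement phenomenon mentioned earlier (the Riemann rearrangement theorem). The sketch below follows the greedy construction used in the standard proof of that theorem: add unused positive terms $1, \frac13, \frac15, \dots$ while the running total is at or below a target, and subtract unused terms $\frac12, \frac14, \dots$ while it is above. The function name `rearranged_partial_sum` and the chosen targets are hypothetical, and only finitely many terms are summed, so the output approximates the limit.

```python
import math


def rearranged_partial_sum(target, num_terms):
    """Partial sum after num_terms terms of a greedy rearrangement of
    1 - 1/2 + 1/3 - 1/4 + ... steered toward a finite real target (hypothetical helper)."""
    next_pos = 1  # next unused odd denominator: positive terms 1/1, 1/3, 1/5, ...
    next_neg = 2  # next unused even denominator: negative terms -1/2, -1/4, -1/6, ...
    total = 0.0
    for _ in range(num_terms):
        if total <= target:
            total += 1.0 / next_pos
            next_pos += 2
        else:
            total -= 1.0 / next_neg
            next_neg += 2
    return total


# In the usual order the partial sums approach ln 2 ...
usual = math.fsum((-1) ** (n + 1) / n for n in range(1, 100_001))
print(usual, math.log(2))
# ... but the same terms, taken in a different order, approach other values.
print(rearranged_partial_sum(1.5, 100_000))
print(rearranged_partial_sum(-1.0, 100_000))
```

Once the running total first crosses the target, it stays within one term of it. Because the terms tend to $0$, the rearranged partial sums converge to the target.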

Taylor series

The Taylor series of a real- or complex-valued function $f(x)$ that is infinitely differentiable at a real or complex number $a$ is the power series

$$f(a)+\frac{f'(a)}{1!}(x-a)+\frac{f''(a)}{2!}(x-a)^{2}+\frac{f'''(a)}{3!}(x-a)^{3}+\cdots,$$

which can be written in the more compact sigma notation as

$$\sum_{n=0}^{\infty}\frac{f^{(n)}(a)}{n!}(x-a)^{n},$$

where $n!$ denotes the factorial of $n$ and $f^{(n)}(a)$ denotes the $n$th derivative of $f$ evaluated at the point $a$. The derivative of order zero, $f^{(0)}$, is defined to be $f$ itself, and $(x-a)^{0}$ and $0!$ are both defined to be $1$. In the case that $a=0$, the series is also called a Maclaurin series.
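As an illustrative example not drawn from the text, every derivative of $e^x$ is $e^x$. So $f^{(n)}(a)=e^{a}$, and the Taylor series about $a$ is $\sum_{n=0}^{\infty}\frac{e^{a}}{n!}(x-a)^{n}$. The sketch below sums its first terms in floating point; the function name `taylor_exp` is hypothetical. Python evaluates `0.0 ** 0` as `1.0`, which matches the convention $(x-a)^0=1$ even at $x=a$.

```python
import math


def taylor_exp(x, a, n_terms):
    """Sum of the first n_terms terms of the Taylor series of exp about a.
    Every derivative of exp is exp, so f^(n)(a) = exp(a) for all n."""
    return math.fsum(
        math.exp(a) * (x - a) ** n / math.factorial(n) for n in range(n_terms)
    )


print(taylor_exp(1.0, 0.0, 20), math.e)          # Maclaurin series (a = 0) at x = 1
print(taylor_exp(2.5, 1.0, 30), math.exp(2.5))   # expansion about a = 1
```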

A Taylor series of $f$ about a point $a$ may diverge, converge at only the point $a$, converge for all $x$ such that $|x-a|<R$ (the largest such $R$ for which convergence is guaranteed is called the radius of convergence), or converge on the entire real line. Even a converging Taylor series may converge to a value different from the value of the function at that point. If the Taylor series at a point has a nonzero radius of convergence and sums to the function in the disc of convergence, then the function is analytic. The analytic functions have many fundamental properties. In particular, an analytic function of a real variable extends naturally to a function of a complex variable. It is in this way that the exponential function, the logarithm, the trigonometric functions and their inverses are extended to functions of a complex variable.
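A classical example of a converging Taylor series that does not sum to its function is the following. It is added here for illustration and is not taken from the original text:

$$
f(x)=\begin{cases} e^{-1/x^{2}}, & x\neq 0,\\ 0, & x=0,\end{cases}
\qquad f^{(n)}(0)=0 \ \text{ for all } n\ge 0,
\qquad \sum_{n=0}^{\infty}\frac{f^{(n)}(0)}{n!}\,x^{n}=0.
$$

The function $f$ is infinitely differentiable on $\mathbb{R}$. Its Maclaurin series converges for every $x$, but to $0$, while $f(x)>0$ for every $x\neq 0$. The series therefore agrees with $f$ only at $x=0$, so $f$ is smooth but not analytic at $0$.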

Fourier series

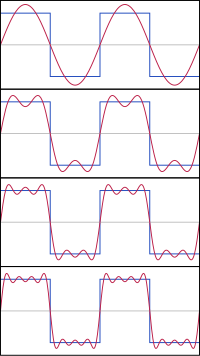

A Fourier series decomposes periodic functions or periodic signals into the sum of a (possibly infinite) set of simple oscillating functions, namely sines and cosines (or complex exponentials). The study of Fourier series is typically handled within Fourier analysis, a branch of mathematical analysis.
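As a hedged illustration, consider the $2\pi$-periodic square wave equal to $+1$ on $(0,\pi)$ and $-1$ on $(-\pi,0)$. Its Fourier series is the standard sine series $\frac{4}{\pi}\sum_{k=0}^{\infty}\frac{\sin((2k+1)x)}{2k+1}$. The sketch below sums its first terms at a few points. The choice of signal and the function name `square_wave_partial_sum` are illustrative assumptions.

```python
import math


def square_wave_partial_sum(x, n_terms):
    """Partial Fourier sum (4/pi) * sum_{k=0}^{n_terms-1} sin((2k+1) x) / (2k+1)
    of the 2*pi-periodic square wave equal to +1 on (0, pi) and -1 on (-pi, 0)."""
    return 4.0 / math.pi * math.fsum(
        math.sin((2 * k + 1) * x) / (2 * k + 1) for k in range(n_terms)
    )


for n in (1, 10, 100, 1000):
    # At x = pi/2 the wave equals +1, at x = -pi/2 it equals -1.
    print(n, square_wave_partial_sum(math.pi / 2, n), square_wave_partial_sum(-math.pi / 2, n))
```

At $x=\pi/2$ the partial sums reduce to $\frac{4}{\pi}\left(1-\frac13+\frac15-\cdots\right)$, which tends to $1$, but only slowly.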

Integration

Integration is a formalization of the problem of finding the area bounded by a curve and the related problems of determining the length of a curve or the volume enclosed by a surface. The basic strategy for solving problems of this type was known to the ancient Greeks and Chinese as the method of exhaustion. Generally speaking, the desired area is bounded from above and below, respectively, by increasingly accurate circumscribing and inscribing polygonal approximations whose exact areas can be computed. By considering approximations consisting of a larger and larger ("infinite") number of smaller and smaller ("infinitesimal") pieces, the area bounded by the curve can be deduced, as the upper and lower bounds defined by the approximations converge to a common value.
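Here is a small sketch of the method of exhaustion for the unit circle, whose area is $\pi$. Regular $n$-gons inscribed in and circumscribed about the circle have areas $\frac{n}{2}\sin\frac{2\pi}{n}$ and $n\tan\frac{\pi}{n}$; these closed forms are standard trigonometry, not taken from the text. The sketch uses `math.pi` only to evaluate the polygon areas, so it checks the squeeze rather than deriving $\pi$ independently. The function name `polygon_bounds` is hypothetical.

```python
import math


def polygon_bounds(n):
    """Areas of the regular n-gons inscribed in and circumscribed about the unit circle.
    The circle's area (pi) lies strictly between them for every n >= 3."""
    inscribed = 0.5 * n * math.sin(2 * math.pi / n)
    circumscribed = n * math.tan(math.pi / n)
    return inscribed, circumscribed


for n in (6, 12, 24, 48, 96):
    lower, upper = polygon_bounds(n)
    print(n, lower, upper, upper - lower)  # the gap shrinks as n doubles
```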

The spirit of this basic strategy can easily be seen in the definition of the Riemann integral, in which the integral is said to exist if upper and lower Riemann (or Darboux) sums converge to a common value as thinner and thinner rectangular slices ("refinements") are considered. Although the machinery used to define it is much more elaborate than that of the Riemann integral, the Lebesgue integral was defined with similar basic ideas in mind. Compared to the Riemann integral, the more sophisticated Lebesgue integral allows area (or length, volume, etc.; termed a "measure" in general) to be defined and computed for much more complicated and irregular subsets of Euclidean space, although there still exist "non-measurable" subsets for which an area cannot be assigned.
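The squeeze between lower and upper Darboux sums can be sketched for a monotone integrand. The sketch assumes $f$ is increasing on $[a,b]$, so that on each subinterval of a uniform partition the infimum sits at the left endpoint and the supremum at the right. For a general function the infimum and supremum cannot be found just by sampling. The helper `darboux_sums` is hypothetical.

```python
def darboux_sums(f, a, b, n):
    """Return (lower, upper) Darboux sums of f over the uniform partition of [a, b]
    into n subintervals, ASSUMING f is increasing on [a, b]."""
    width = (b - a) / n
    # For increasing f, the infimum on [x_i, x_{i+1}] is f(x_i) (left endpoint) ...
    lower = width * sum(f(a + i * width) for i in range(n))
    # ... and the supremum is f(x_{i+1}) (right endpoint).
    upper = width * sum(f(a + (i + 1) * width) for i in range(n))
    return lower, upper


def square(x):
    """f(x) = x**2, which is increasing on [0, 1]."""
    return x * x


for n in (10, 100, 1000):
    print(n, darboux_sums(square, 0.0, 1.0, n))
```

For $f(x)=x^2$ on $[0,1]$, the upper sum exceeds the lower sum by exactly $(f(1)-f(0))/n = 1/n$ in exact arithmetic. Both sums are therefore squeezed toward $\int_0^1 x^2\,dx = \frac13$ as the partition is refined.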
